Web development has grown into something unrecognizable from its inception. Instead of hosting their own servers, web developers often don't host, or even pay for, a server at all. Sending static files to the server via FTP is ancient history. None of the files we write as web developers are truly static anymore; instead, a build step turns them into output that is unreadable to a human. This blog post will cover the good, the bad, and some tips to navigate this new era of compilation in web development using a Static Site Generator and a host for the statically generated site.

Preface

There are a variety of options for choosing a Static Site Generator and a host for your site. For reference, I will list some options below, but for the purposes of this blog post I will refer to Gatsby hosted on Netlify, as that's what we use for this site!

SSGs:

- Gatsby

- Next.js

- Nuxt.js

- 11ty

- Jekyll

- Hugo

Hosts:

- Gatsby Cloud

- Vercel (native host for Next.js)

- Netlify

- GitHub Pages

- GitLab Pages

- AWS Amplify

- Host yourself!

Build Times: The Good

Your website 'build' does a lot of really awesome things, all of which are meant to deliver a more performant website and drive better user experience. While each build varies between companies and developers, there are a few standard optimizations that SSGs like Gatsby do automatically. Optimizations such as:

- Minified JS

- Remove unused CSS

- Minified CSS

- Transpile JS to old browser syntax

- Prebuild HTML pages and upload them to a CDN (this is what 'Static Site Generation' is!)

- Asset processing & bundling

You can do all of these things yourself, without a static site generator. They are all customizable as well, but letting a static site generator take care of these will save you immense time and provide your site users with a great experience.

SSGs also automatically solve issues for you, ones that are inherently annoying and/or not directly related to building your site. By solving these problems for developers, we are able to spend more of our time building out product features and styles for our site, A.K.A. what we all would much rather do than configuration :). A few of these problems automatically solved for us are:

- Caching strategy and caching headers

- Web crawlability: by serving static HTML, web crawlers can index our site optimally and our SEO is already great

- Asset processing & bundling: yes, this is an optimization above. But this is a huge performance problem that is solved out of the box for us!

- Development and production builds

Lastly, using SSGs also opens the door for optional plugins and libraries designed for those SSGs. There are many plugins on Netlify that are incredibly easy to install and set up, oftentimes just one-click installs. Some helpful ones from Netlify include:

- Cypress - run your cypress tests as part of your build process; prevent a deploy if tests fail

- Essential Gatsby (including caching) - Speed up builds with a cache and other essential Gatsby helpers

- Gmail - send an email after a deploy succeeds/fails

- Lighthouse - generate a lighthouse report for the build, and configure to fail a deploy if your score is below a certain threshold

- Submit sitemap - automatically submit your sitemap to search engines after a successful deploy

There are many more plugins from Netlify as well, and as you can tell they do some magic to make the household chores of web development disappear. I highly encourage checking out the rest of the plugins from Netlify (and Gatsby's plugins too) to see what is possible. There is one huge downside to adding plugins: increasing your build time, the real reason we're writing this blog post.

Build Times: The Bad

Before I get into the dark side of SSGs & build times, let me come out and say, unequivocally, longer build times are worth it for your site to perform exceptionally well. While all the reasons below are painful, the magic that happens during build time greatly outweighs that pain.

There are 3 main pain points behind build times:

- Waiting sucks

- Wasting resources (& money)

- Build timeouts

Waiting sucks

Besides the fact you are sitting around waiting for a build to complete, with long build times you are also destroying the immediate feedback loop that most developers enjoy nowadays with things like Hot Module Replacement. Most development happens locally, but for times where you need to test a live site, do you really want to wait more than 5 minutes to see how things went? Netlify's default timeout is 15 minutes, and if you are waiting that long just for a timeout to occur, you are not getting much done that day.

Wasting resources (& money)

Building your site from Gatsby takes computing resources: a server needs to run the build process and memory needs to be allocated. A 45-minute build certainly beats running your own server 24/7, but Netlify charges by build minute. Those 45-minute builds will add up quickly, especially compared to <10 or <5 minute builds.
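To make the difference concrete, here is a rough sketch of the arithmetic. The helper name build_minutes and the workload (10 deploys a day over a 30-day month) are assumptions for illustration only; plug in your own deploy frequency and your host's actual pricing.

```shell
# Hypothetical helper: total build minutes consumed over a period.
# Arguments: builds per day, minutes per build, number of days.
build_minutes() {
  echo $(( $1 * $2 * $3 ))
}

# Assumed workload: 10 deploys a day over a 30-day month.
slow=$(build_minutes 10 45 30)   # 45-minute builds
fast=$(build_minutes 10 5 30)    # 5-minute builds

echo "45-minute builds: $slow build minutes"
echo "5-minute builds:  $fast build minutes"
echo "difference:       $(( slow - fast )) build minutes"
```

Under those assumed numbers, the gap between the two is what you would be paying for in extra build minutes each month.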

At Anvil, we're on a mission to eliminate paperwork, for many reasons. One of which is to help the environment. What's another way to help the environment? You guessed it: keep those build times low! Together, we can save the 🌴🎄🌳 and some ⚡️🔋🔌, all while saving some 💰🤑💸.

Build timeouts

Build timeouts and wasting resources are two reasons that go hand in hand. Timeouts are there specifically for preventing excessive resource usage and so you don't get charged 10x what you normally pay when your deploy is accidentally stuck in an infinite loop.

But doesn't that make timeouts a good thing? Yes. Except in the case where your site doesn't loop infinitely (I hope most of the time) and you are in a time crunch to get features out. Local development with SSGs like Gatsby relaxes the optimizations so you can develop faster. It's likely you made lots of changes that worked locally, only for the build to time out when deploying to production. Incredibly frustrating and potentially release-ruining, yet build timeouts are still helpful and critical to have.

Avoiding your build timeout

Now let's dive into actually resolving the issue of long builds. This blog post was inspired by an urgent need to reduce our build time for this very site (useanvil.com), and all the tips below were the things we did to reduce our bloated build time from 55+ minutes down to <5 minutes, plus some others we might do in the future.

Audit your site

Before you significantly change any configuration, code, or assets, review your codebase and site and remove dead code.

Part of the magic that Gatsby provides is creating a page template for pages like blog posts, documentation, and other article-based pages. Then, you supply the data for each article (usually in Markdown) and Gatsby builds each page with that template. So it might seem like removing 1 JS file and a few lines of code won't make a big difference, but in reality that could be tens, hundreds or even thousands of pages that are built statically.

In our case, we removed an outdated template of the site and all the articles with it. A 1-minute change in our codebase yielded a 15+ minute build time reduction, from 55+ minutes to ~40 minutes.

Enable caching

We already had caching enabled through a now-deprecated plugin, but we upgraded to the Essential Gatsby Plugin. Since caching was already on, there wasn't a huge improvement in build times. But if your site is image heavy and has no caching yet, your build time will drop dramatically once the first build has loaded the cache with pages and assets.

Compress images

I'm not talking about gzipping your images to be sent to the client when they visit your site. Gatsby (and all the other SSGs) take care of that for you.

I'm referring to before your build process even starts. There are two kinds of image compression: lossless and lossy. Lossless reduces file size without reducing image quality, and lossy reduces file size while reducing image quality (supposed to be imperceptible to the human eye, but that is for you to determine).

Using trimage, a cross-platform lossless image compression tool, we compressed the images for ~120 different articles, for a total reduction of 20MB+. We can squeeze more out of our images if we use lossy compression and run it on all images on our site. But in the short term we targeted the heavy hitters on our article-based pages.
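If you are not sure where your own heavy hitters are, a quick listing of the largest image files is a reasonable place to start. This is a minimal sketch, not part of our pipeline: largest_images is a hypothetical helper name, and it assumes a find that supports -iname (GNU and BSD both do).

```shell
# List the N largest PNG/JPG/JPEG files under a directory, biggest first.
# Each output line is the size in KB (as reported by du -k), then the path.
largest_images() {
  dir=$1
  count=${2:-10}   # default to the top 10 when no count is given
  find "$dir" -type f \( -iname '*.png' -o -iname '*.jpg' -o -iname '*.jpeg' \) \
    -exec du -k {} + | sort -rn | head -n "$count"
}

# Example: largest_images src 20
```

Running it against your content directories before and after compression also gives a simple way to see which files shrank.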

I'd like to highlight that for this blog post and to meet our deadline, I only ran the compression tool on 2 directories with ~120 articles worth of images. To prevent us from getting into a build time hole again, I've set up the following bash script in a GitHub action, so we automatically compress png and jpg files as pull requests come in:

#!/bin/bash
# example usage: ./compress-images.sh -q src .exiftool.config
# to be used in GH action - need to install trimage & exiftool to use
# run this script on a directory or file to compress all pngs, jpgs, and jpegs
# if run on a directory, this will recurse to subdirectories
# this script will only attempt compression once on an image,
# afterwards the script will not run again -- this is done by adding a meta flag
# to the image file itself that signifies trimage compression was already run

VERBOSE=true
QUIET_FLAG=""
# consume any leading --quiet / -q flags
while true; do
  if [ "$1" = "--quiet" ] || [ "$1" = "-q" ]; then
    VERBOSE=false
    QUIET_FLAG="-q"
    shift 1
  else
    break
  fi
done

EXIFTOOL_CONFIG=$2

for filename in "$1"/**; do
  if [[ -d "$filename" ]]
  then
    $VERBOSE && echo "Entering directory $filename"
    # recurse into the subdirectory, keeping the quiet setting
    "$0" $QUIET_FLAG "$filename" "$EXIFTOOL_CONFIG"
    continue
  fi

  # the custom "trimmed" flag is only present on images already compressed
  TRIMMED=$(exiftool -trimmed "$filename" | grep "true")
  if [[ -e "$filename" && $TRIMMED != *"true" && ($filename == *".png" || $filename == *".jpg" || $filename == *".jpeg") ]]
  then
    $VERBOSE && echo "Trimming $filename"
    trimage -f "$filename"
    exiftool -config "$EXIFTOOL_CONFIG" -trimmed=true "$filename" -overwrite_original
  fi
done

compress-images.sh: bash script to compress all images in a given directory

Besides running trimage on all files, the script also uses a tool called exiftool to add a meta flag to each compressed image. This is so we don't run trimage again on already compressed files. It has no impact on the Gatsby/Netlify build time that this blog post is about, but it saves immense time (I'm talking hours, especially if your project has a lot of images) on pull requests that run this GitHub Action, because no image gets compressed twice.

#------------------------------------------------------------------------------
# File:         example.config
#
# Description:  configuration to enable trimmed flag for png and jpeg
#
# Notes:        See original example @ https://exiftool.org/config.html
#------------------------------------------------------------------------------

# NOTE: All tag names used in the following tables are case sensitive.

# The %Image::ExifTool::UserDefined hash defines new tags to be added
# to existing tables.
%Image::ExifTool::UserDefined = (
  # new PNG tags are added to the PNG::TextualData table:
  'Image::ExifTool::PNG::TextualData' => {
    trimmed => { },
  },
  'Image::ExifTool::XMP::Main' => {
    trimmed => {
      SubDirectory => {
        TagTable => 'Image::ExifTool::UserDefined::trimmed',
      },
    },
  }
);

%Image::ExifTool::UserDefined::trimmed = (
  GROUPS => { 0 => 'XMP', 1 => 'XMP-trimmed', 2 => 'Image' },
  NAMESPACE => { 'trimmed' => 'http://ns.myname.com/trimmed/1.0/' },
  WRITABLE => 'string',
  trimmed => { },
);

.exiftool.config: config file to enable custom meta tag (trimmed) on PNGs and JPEGs

Here's a video I sped up of MBs dropping as I ran the above script with trimage and exiftool, something to the delight of developers everywhere:

First 1/4 of all files in src. You'll notice that it hangs around 106.4MB (and actually slightly goes higher in bytes). That is because the script is running on all files in src, including the blog-posts I already ran trimage on ad-hoc. The slight bump in bytes is exiftool adding the compression flag to metadata on the image.

Query only for what you need

Gatsby uses GraphQL to get data from Markdown-based articles, and for various other parts of your site. Each query takes time during your build so make sure you do 2 things to mitigate query time during your build:

- Only query for data you need - in our case, we had 1-2 fields on each article being queried unnecessarily (on over 100 articles)

- Only query for data once - we adhere to this one well, but avoid calling the same query in a different place. If possible, pass down the data via props to components that need it.

Enabling the upgraded Essential Gatsby plugin, compressing ~120 pages worth of images, and removing fields from GraphQL queries brought the build time down by another ~15 minutes, from ~40 minutes to ~25 minutes.

Keep up to date with package versions

This just in: technology gets better with time. It's true! Just like how the Mesopotamians invented the wheel to revolutionize their lives, we upgraded Gatsby from v2.x to v3.x (3.14.1 to be specific) and upgraded to the latest versions of our plugins which revolutionized our build time by another ~15 minutes! Just by upgrading major versions, we went from ~25 minutes to ~10 minutes.

Image compression on all the things

The image compression section above covered the first pass on our biggest directories of images. The second pass, run on our entire src directory, got us down from ~10 minutes to ~6 minutes.

Gatsby's experimental flags

The last hurdle is actually one we have yet to deploy to this site—I'm still playing around with the configuration, but using Gatsby's "experimental" flags has our site building in <5 minutes locally & in test environments. The ones I'm currently using and testing:

- GATSBY_EXPERIMENTAL_PAGE_BUILD_ON_DATA_CHANGES=true - environment variable to turn on incremental page builds, which only build pages that have changed since the last build instead of building all the pages again. This ships as part of Gatsby v3, so if you are using v3, you have this baked in.

- GATSBY_EXPERIMENTAL_QUERY_CONCURRENCY=32 - environment variable that controls how many GraphQL queries are run in parallel. The default is 4.

- GATSBY_CPU_COUNT=logical_cores - environment variable that controls how many cores are used while building. The default is physical_cores, and you can supply a definitive number like 2 instead of letting Gatsby calculate your physical or logical cores.

- PARALLEL_SOURCING: true - gatsby-config flag to run sourcing plugins in parallel. Requires Node v14.10 or higher.

- PARALLEL_QUERY_RUNNING - gatsby-config flag to run GraphQL queries in parallel. I would recommend using this over GATSBY_EXPERIMENTAL_QUERY_CONCURRENCY, since this is managed/optimized by the Gatsby team. Requires Node v14.10 or higher.

- FAST_DEV: true - won't help with your build timeouts, but will help speed up your dev build and dev page loading.
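For the environment-variable options above, one way to try them locally without touching your shell profile is to set them only for the build command. This is a minimal sketch: build_with_gatsby_flags is a hypothetical helper name, the values are the ones listed above, and the gatsby-config flags (PARALLEL_SOURCING and friends) are not covered here because they live in your Gatsby config rather than the environment.

```shell
# Run any build command with the experimental environment variables
# set just for that command.
build_with_gatsby_flags() {
  GATSBY_EXPERIMENTAL_PAGE_BUILD_ON_DATA_CHANGES=true \
  GATSBY_EXPERIMENTAL_QUERY_CONCURRENCY=32 \
  GATSBY_CPU_COUNT=logical_cores \
  "$@"
}

# Example: build_with_gatsby_flags npx gatsby build
```

Wrapping the variables in a function keeps the experiment in one place, so it is easy to compare a build with and without the flags.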

Extra stuff we didn't do (yet)

Gatsby has an entire page dedicated to all the different ways they recommend improving your build performance, which you can find here. The steps I've taken in this blog post and what Gatsby recommends are great ways to reduce your build time, but they are not the only ways! If you exhaust both lists, think outside the box about how you can effectively improve your build and site performance.

There are two actions from the Gatsby recommendations that I like:

- Parallelize your image processing - this is done natively on Gatsby Cloud; if you are like us and host your site on Netlify, this is the plugin to (experimentally) parallelize image processing as well.

- Optimize your bundle(s) - we have not had the need to do this yet, but auditing and optimizing your JS bundle(s) will help bring the build time down, as well as improve your site performance.

Gatsby specific problem - Node out of memory

One problem we did run into while hitting our timeout was Node running out of memory. We likely hit this problem since we pushed a lot of changes all at once. In reality this isn't a Gatsby problem, more of a Node problem. It just happens to affect a lot of Gatsby users, as you can find the issue and solution here.

So, if you run into something similar and get a stack trace like this while trying to build:

⠦ Building production JavaScript and CSS bundles

<--- Last few GCs --->

[19712:0x2dbca30] 45370 ms: Scavenge 1338.2 (1423.9) -> 1337.3 (1423.9) MB, 2.9 / 0.0 ms (average mu = 0.163, current mu = 0.102) allocation failure

[19712:0x2dbca30] 45374 ms: Scavenge 1338.4 (1423.9) -> 1337.5 (1423.9) MB, 2.8 / 0.0 ms (average mu = 0.163, current mu = 0.102) allocation failure

[19712:0x2dbca30] 45378 ms: Scavenge 1338.6 (1423.9) -> 1337.7 (1424.4) MB, 2.6 / 0.0 ms (average mu = 0.163, current mu = 0.102) allocation failure

<--- JS stacktrace --->

==== JS stack trace =========================================

0: ExitFrame [pc: 0x34eb54adbe1d]

1: StubFrame [pc: 0x34eb54a875c2]

Security context: 0x2caa7a21e6e9 <JSObject>

2: /*anonymous*/(aka /*anonymous*/) [0x23804dd52ac9] [/home/derek/dev/project1/node_modules/@babel/core/lib/transformation/file/merge-map.js:~155] [pc=0x34eb5534b963](this=0x01389b5022b1 <null>,m=0x1c2e6adbae29 <Object map = 0x11c6eb590b11>)

3: arguments adaptor frame: 3->1

4: forEach...

FATAL ERROR: Ineffective mark-compacts near heap limit Allocation failed - JavaScript heap out of memory

Then you need to increase your Node heap size by setting the NODE_OPTIONS environment variable during build time to --max_old_space_size=4096.
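To reproduce or verify the fix on your own machine, the same variable can be set for a single command. This is only a sketch: with_node_heap is a hypothetical helper name, and note that it replaces any NODE_OPTIONS value you already have set rather than appending to it.

```shell
# Run a command with a larger V8 old-space heap limit (size in MB).
# Assumption: overriding any existing NODE_OPTIONS is acceptable.
with_node_heap() {
  heap_mb=$1
  shift
  NODE_OPTIONS="--max_old_space_size=${heap_mb}" "$@"
}

# Example build:
#   with_node_heap 4096 npx gatsby build
# Example check of the limit Node actually applied (value is in bytes):
#   with_node_heap 4096 node -e 'console.log(require("v8").getHeapStatistics().heap_size_limit)'
```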

For Netlify users, that means doing one of the following (based on how you configure your project):

-

If you configure your project yourself, in your

netlify.tomladd this:[build.environment] NODE_OPTIONS = "--max_old_space_size=4096" -

If you use the Netlify dashboard to configure, go to

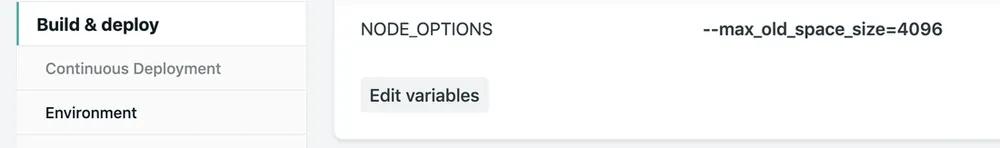

Build & Deploy > Environment. Add a variable calledNODE_OPTIONS, set it's value to literally--max_old_space_size=4096, with no quotations. Like this:

The quest for sub-minute build times

Believe it or not, sub-minute build times do exist. And for sites with an immense number of pages too. The easiest way to get there is to throw more computing power at it: Gatsby even mentions it as one of their tips to reduce build time. But most of us don't have infinite compute resources at our disposal, and as we learned earlier, we want to save some power and money!

I hope you learned something in this post, so you can take heed and avoid your build timeout in advance of your big releases. Keep chasing that sub-minute build time, and even if it doesn't come today, remember that technology is getting better and more efficient every day. If you have any questions or want to share your build optimization tips, let us know at developers@useanvil.com.

- SSR: Server Side Rendering

- ISG: Incremental Static (Re)Generation

- DSG: Deferred Static Generation